Model Selection in Mixture of Experts Models

Sep 22, 2020

TrungTin Nguyen

Postdoctoral Research Fellow

A central theme of my research is data science at the intersection of statistical learning, machine learning and optimization.

Publications

A non-asymptotic risk bound for model selection in high-dimensional mixture of experts via joint rank and variable selection

We are motivated by the problem of identifying potentially nonlinear regression relationships between high-dimensional outputs and …

A non-asymptotic approach for model selection via penalization in high-dimensional mixture of experts

Mixture of experts (MoE) are a popular class of statistical and machine learning models that have gained attention over the years due …

Model selection by penalization in mixture of experts models with a non-asymptotic approach

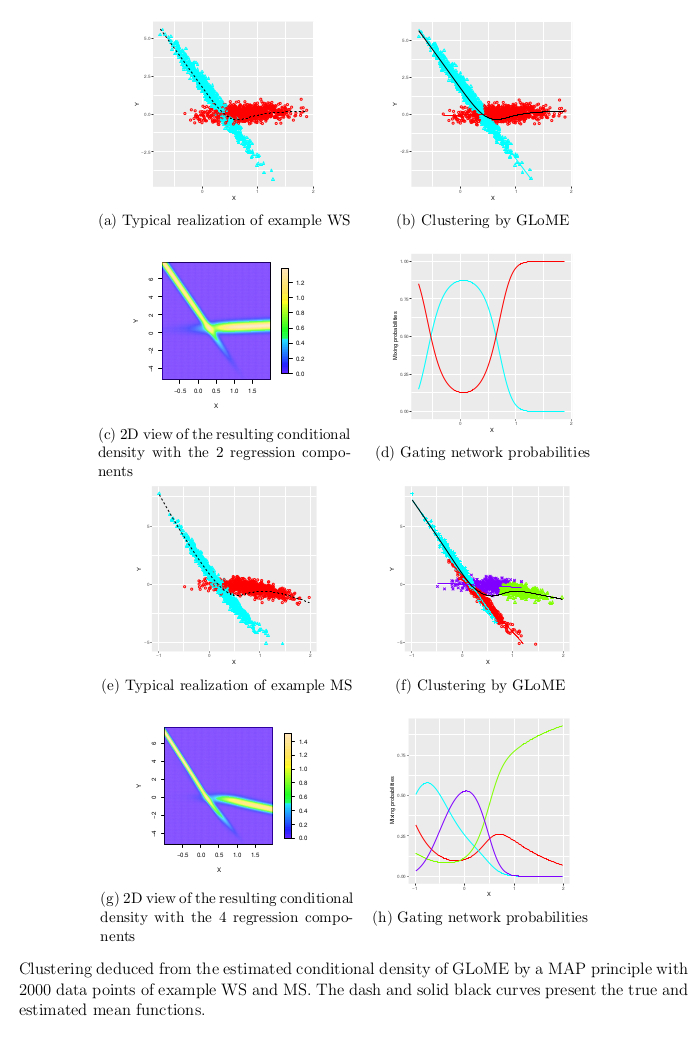

This study is devoted to the problem of model selection among a collection of Gaussian-gated localized mixtures of experts models …

Model Selection and Approximation in High-dimensional Mixtures of Experts Models: from Theory to Practice

Mixtures of experts (MoE) models are a ubiquitous tool for the analysis of heterogeneous data across many fields including statistics, …

A non-asymptotic model selection in block-diagonal mixture of polynomial experts models

Model selection via penalized likelihood type criteria is a standard task in many statistical inference and machine learning problems. It has led to deriving criteria with asymptotic consistency results and an increasing emphasis on introducing non-asymptotic criteria. We focus on the problem of modeling non-linear relationships in regression data with potential hidden graph-structured interactions between the high-dimensional predictors, within the mixture of experts modeling framework. In order to deal with such a complex situation, we investigate a block-diagonal localized mixture of polynomial experts (BLoMPE) regression model, which is constructed upon an inverse regression and block-diagonal structures of the Gaussian expert covariance matrices. We introduce a penalized maximum likelihood selection criterion to estimate the unknown conditional density of the regression model. This model selection criterion allows us to handle the challenging problem of inferring the number of mixture components, the degree of polynomial mean functions, and the hidden block-diagonal structures of the covariance matrices, which reduces the number of parameters to be estimated and leads to a trade-off between complexity and sparsity in the model. In particular, we provide a strong theoretical guarantee$:$ a finite-sample oracle inequality satisfied by the penalized maximum likelihood estimator with a Jensen-Kullback-Leibler type loss, to support the introduced non-asymptotic model selection criterion. The penalty shape of this criterion depends on the complexity of the considered random subcollection of BLoMPE models, including the relevant graph structures, the degree of polynomial mean functions, and the number of mixture components.

An l1-oracle inequality for the Lasso in mixture-of-experts regression models

Mixture-of-experts (MoE) models are a popular framework for modeling heterogeneity in data, for both regression and classification problems in statistics and machine learning, due to their flexibility and the abundance of statistical estimation and model choice tools. Such flexibility comes from allowing the mixture weights (or gating functions) in the MoE model to depend on the explanatory variables, along with the experts (or component densities). This permits the modeling of data arising from more complex data generating processes, compared to the classical finite mixtures and finite mixtures of regression models, whose mixing parameters are independent of the covariates. The use of MoE models in a high-dimensional setting, when the number of explanatory variables can be much larger than the sample size (i.e., $p \gg n)$, is challenging from a computational point of view, and in particular from a theoretical point of view, where the literature is still lacking results in dealing with the curse of dimensionality, in both the statistical estimation and feature selection. We consider the finite mixture-of-experts model with soft-max gating functions and Gaussian experts for high-dimensional regression on heterogeneous data, and its $l_1$-regularized estimation via the Lasso. We focus on the Lasso estimation properties rather than its feature selection properties. We provide a lower bound on the regularization parameter of the Lasso function that ensures an $l_1$-oracle inequality satisfied by the Lasso estimator according to the Kullback-Leibler loss.

Talks

Model selection by penalization in mixture of experts models with a non-asymptotic approach.

This study is devoted to the problem of model selection among a collection of Gaussian-gated localized mixtures of experts models …

Jun 13, 2022 2:00 PM — Jun 17, 2022 4:00 PM

Université Claude Bernard Lyon 1, France

A non-asymptotic approach for model selection via penalization in high-dimensional mixture of experts models.

Mixture of experts (MoE) are a popular class of statistical and machine learning models that have gained attention over the years due …

May 11, 2022 2:00 PM — 4:00 PM

Vietnam Institute for Advanced Study in Mathematics, Vietnam

A non-asymptotic approach for model selection via penalization in mixture of experts models

Mixture of experts (MoE), originally introduced as a neural network, is a popular class of statistical and machine learning models that …

Apr 4, 2022 9:00 AM — Apr 8, 2022 6:00 PM

Institut d'Etudes Scientifiques de Cargèse, Corsica

A non-asymptotic model selection in mixture of experts models

Mixture of experts (MoE), originally introduced as a neural network, is a popular class of statistical and machine learning models that …

Mar 18, 2022 11:00 AM — 12:00 PM

ENSAI École Nationale de Statistique et Analyse de l'Information, France

Model Selection and Approximation in High-dimensional Mixtures of Experts Models$:$ From Theory to Practice

Mixtures of experts (MoE) models are a ubiquitous tool for the analysis of heterogeneous data across many fields including statistics, …

Dec 14, 2021 1:30 PM — 6:00 PM

Laboratoire de Mathématiques Nicolas Oresme, Université de Caen Normandie, France

Model Selection and Approximation in High-dimensional Mixtures of Experts Models From Theory to Practice

Mixtures of experts (MoE) models are a ubiquitous tool for the analysis of heterogeneous data across many fields including statistics, …

Oct 29, 2021 9:00 AM — 6:00 PM

Laboratoire d'Ondes et Milieux Complexes, Le Havre, France

Approximation and non-asymptotic model selection in mixture of experts models

Mixtures of experts (MoE) models are a ubiquitous tool for the analysis of heterogeneous data across many fields including statistics, …

Sep 30, 2021 9:00 AM — Sep 29, 2021 5:00 PM

INSA Rouen Normandie, Rouen, France

A non-asymptotic model selection in mixture of experts models

Mixture of experts (MoE) is a popular class of models in statistics and machine learning that has sustained attention over the years, …

Jun 2, 2021 — Jun 4, 2021

Institut de Mathématique d'Orsay, Paris, France

Non-asymptotic penalization criteria for model selection in mixture of experts models

Mixture of experts (MoE) is a popular class of models in statistics and machine learning that has sustained attention over the years, …

Apr 8, 2021 9:00 AM — Apr 9, 2021 3:00 PM

Laboratory of Mathematics Raphaël Salem (LMRS, UMR CNRS 6085)