A non-asymptotic approach for model selection via penalization in mixture of experts models

Image credit: TrungTin Nguyen

Abstract

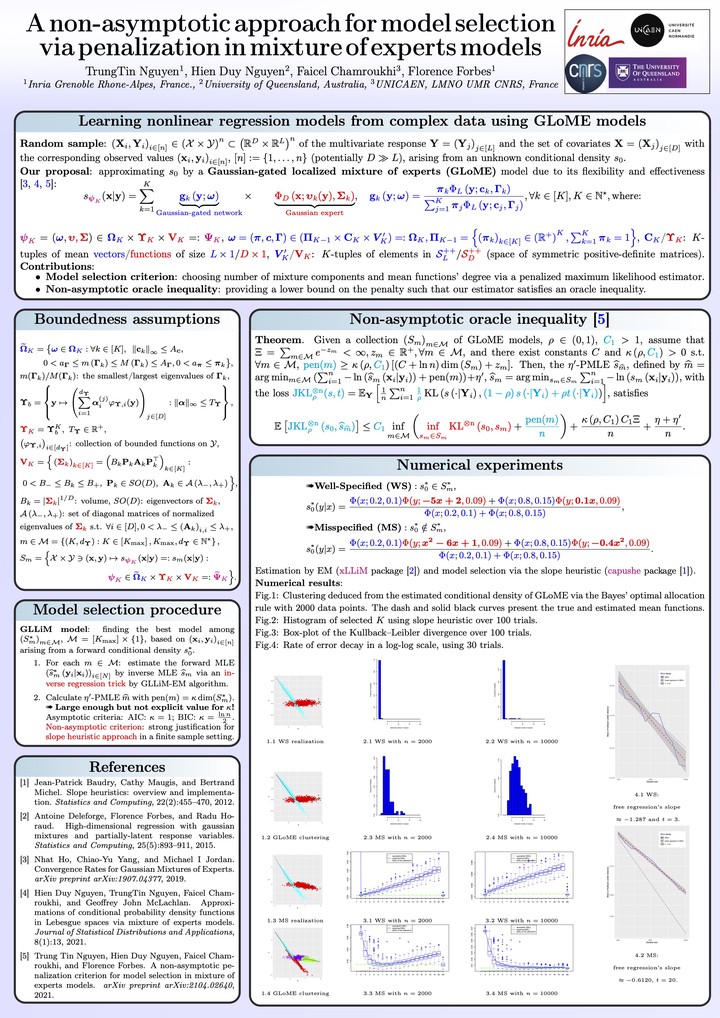

Mixture of experts (MoE) models, originally introduced as neural networks, are a popular class of statistical and machine learning models that has gained attention over the years due to its flexibility and efficiency. They provide conditional constructions for regression in which the mixture weights, along with the component densities, are explained by the predictors, allowing for flexibility in the modeling of data arising from complex data generating processes. We have shown in previous studies that such models have good approximation ability provided the number of experts is large enough. More precisely, when the input and output variables are both compactly supported, we have established denseness results in Lebesgue spaces for conditional probability density functions, so that such densities can be approximated to an arbitrary degree of accuracy. In this work, we consider Gaussian-gated localized MoE (GLoME) and block-diagonal covariance localized MoE (BLoME) regression models to represent nonlinear relationships in heterogeneous data with potential hidden graph-structured interactions between high-dimensional predictors. These models pose difficult questions in statistical estimation and model selection, from both a computational and a theoretical perspective. This talk is devoted to the problem of model selection among a collection of GLoME or BLoME models characterized by the number of mixture components, the complexity of the Gaussian mean experts, and the hidden block-diagonal structures of the covariance matrices, in a penalized maximum likelihood estimation framework. In particular, we establish non-asymptotic risk bounds that take the form of weak oracle inequalities, provided that lower bounds on the penalties hold. The good empirical behavior of the resulting procedure is then demonstrated on synthetic and real datasets.
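
As a rough sketch, the penalized maximum likelihood selection step can be written in symbols. The notation is an assumption introduced here for illustration and is not taken from the abstract: $(x_i, y_i)_{i=1}^{n}$ are the observed predictor and response pairs, $\mathcal{M}$ is the collection of candidate models, $S_m$ is the set of conditional densities in model $m$, and $\mathrm{pen}(m)$ is the penalty attached to $m$.

$$
\widehat{s}_m \in \operatorname*{arg\,min}_{s \in S_m} \; -\sum_{i=1}^{n} \ln s(y_i \mid x_i),
\qquad
\widehat{m} \in \operatorname*{arg\,min}_{m \in \mathcal{M}} \left\{ -\sum_{i=1}^{n} \ln \widehat{s}_m(y_i \mid x_i) + \mathrm{pen}(m) \right\}.
$$

Under this reading, each $m$ would index a number of mixture components, a complexity of the Gaussian mean experts and, for BLoME, a block-diagonal covariance structure, and the selected estimator is $\widehat{s}_{\widehat{m}}$. The risk bounds mentioned above concern this selected estimator and hold when $\mathrm{pen}(m)$ is bounded below suitably; the exact form of that lower bound is not stated in the abstract and is left unspecified here.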